Breaking open the “black box”: How risk assessments undermine judges’ perceptions of young people

It's complicated enough for judges to weigh the influence of youth in their decisions. A new paper argues that algorithmic risk assessments may further muddy the waters.

by Wendy Sawyer, August 22, 2018

Imagine that you’re a judge sentencing a 19-year-old woman who was roped into stealing a car with her friends one night. How does her age influence your decision? Do you grant her more leniency, understanding that her brain is not yet fully developed and that peers have greater influence at her age? Or, given the strong link between youth and criminal behavior, do you err on the side of caution and give her a longer sentence or closer supervision?

Now imagine that you’re given a risk assessment score for the young woman, and she is labeled “high risk.” You don’t know much about the scoring system except that it’s “evidence-based.” Does this new information change your decision?

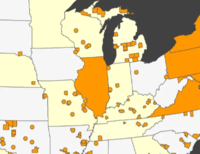

For many judges, this dilemma is very real. Algorithmic risk assessments have been widely adopted by jurisdictions hoping to reduce discrimination in criminal justice decision-making, from pretrial release decisions to sentencing and parole. Many critics (including the Prison Policy Initiative) have voiced concerns about the use of these tools, which can actually worsen racial disparities and justify more incarceration. But in a new paper, law professors Megan T. Stevenson and Christopher Slobogin consider a different problem: how algorithms weigh some factors — in this case, youth — differently than judges do. They then discuss the ethical and legal implications of using risk scores produced by those algorithms to make decisions, especially when judges don’t fully understand them.

For their analysis, Stevenson and Slobogin reverse-engineer the COMPAS Violent Recidivism Risk Score (VRRS),¹ one of the leading “black box”² risk assessment tools. They find that “roughly 60% of the risk score it produces is attributable to age.” Specifically, youth corresponds with higher average risk scores, which decline sharply from ages 18 to 30. “Eighteen year old defendants have risk scores that are, on average, twice as high as 40 year old defendants,” they find. The COMPAS VRRS is not unique in its heavy-handed treatment of age: the authors also review seven other publicly available risk assessment algorithms and find that all of them give equal or greater weight to youth than to criminal history.³

To a certain extent, it makes sense that these violent recidivism risk scores rely heavily on age. Criminologists have long observed the relationship between age and criminal offending: young people commit crime at higher rates than older people, and violent crime peaks around age 21.

But for courts, the role of a defendant’s youth is not so simple: yes, youth is a factor that increases risk, but it also makes young people less culpable. Stevenson and Slobogin refer to this phenomenon as a “double-edged sword” and point out that there are other factors besides youth that are treated as both aggravating and mitigating, such as mental illness or disability, substance use disorders, and even socioeconomic disadvantage. While a judge might balance the effects of youth on risk versus culpability, algorithmic risk assessments treat youth as a one-dimensional factor, pointing only to risk.

In theory, judges can still consider whether a defendant’s youth makes them less culpable. But, Stevenson and Slobogin argue, even this separate consideration may be influenced by the risk score, which confers a stigmatizing label upon the defendant. For example, the judge may see a score characterizing a young defendant simply as “high risk” or “high risk of violence,” with no explanation of the factors that led to that conclusion.

This “high risk” label is both imprecise and fraught, implying inherent dangerousness. It can easily affect the judge’s perception of the defendant’s character and, in turn, the judge’s decision. Yet the defendant’s age may well be the main reason for the “high risk” label. Through this process, “judges might unknowingly and unintentionally use youth as a blame-aggravator,” where they would otherwise treat youth as a mitigating factor. “That result is illogical and unacceptable,” the authors rightly conclude.

This is not some hypothetical: even when these algorithms are made publicly available, many judges admit they don’t really know what goes into the scores reported to them. Stevenson and Slobogin point to a recent survey of Virginia judges that found only 29% are “very familiar” with the risk assessment tool they use, while 22% are “unfamiliar” or only “slightly familiar” with it. (This is in a state where the risk scores come with explicit sentencing recommendations.) When judges rely on risk scores without knowing which factors affect those scores, or how, chances are good that their decisions will be inconsistent with their own reasoning about how those factors should be weighed.

The good news is that this “illogical and unacceptable” result is not inevitable, even as algorithmic risk assessment tools are being rolled out in courts across the country. Judges retain discretion in their use of risk scores in decision-making. With training and transparency — including substantive guidance on proprietary algorithms like COMPAS — judges can be equipped to thoughtfully weigh the mitigating and aggravating effects of youth and other “double-edged sword” factors.

Stevenson and Slobogin provide specific suggestions about how to improve transparency where risk assessments are used, and courts would be wise to demand these changes. Relying upon risk assessments without fully understanding them does nothing to help judges make better decisions. In fact, risk assessments can unwittingly lead courts to make the same discriminatory judgments that these tools are supposed to prevent.

Footnotes

1. More precisely, the authors were able to complete only a “partial reverse-engineer” with the limited data available. Their model includes seven factors: age, current charges, juvenile criminal history, race, number of prior arrests, prior incarceration, and gender. They did not have access to all of the data used in the COMPAS VRRS, such as vocational and educational measures. While a complete reverse engineering would explain 100% of the variation in the VRRS, the seven factors they used collectively explain 72% of it.

2. “Black box” algorithms are those that are not made available to public (or even court) scrutiny. Data goes in and a risk score comes out, but exactly how the input factors are used to calculate the score is not explained, and therefore cannot be challenged by defendants who are subject to decisions influenced by those scores. Another recent article on the subject points to the case of Eric Loomis, who was sentenced based partly on his “high risk” COMPAS score. Loomis argued that the opacity of the COMPAS algorithm violated his right to due process because he could not challenge the tool’s validity, but the Wisconsin Supreme Court denied his request to review the COMPAS algorithm.

3. Here, youth is defined as age 18 compared with age 50; criminal history benchmarks ranged from three to six prior arrests to five or more prior felony convictions. On average, these instruments ascribe 40% more importance to youth than to criminal history. Of particular note, in the widely used Public Safety Assessment (PSA), “the fact that an individual is under 23 contributes as much to the risk score as having three or more prior violent convictions.”